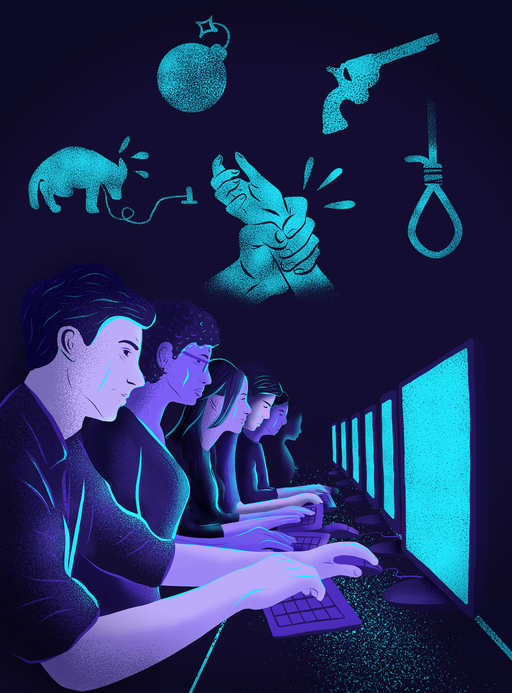

Inside the murky world of social media moderation

More and more social media content moderators are coming out with horror stories about their workplaces. One writer takes a look behind the curtain at what it's like moderating for big tech.

When I would report hate speech, violence or abuse on social media, I’d imagine the content whirring off into some vast digital matrix, circulating through loops of code and wires. We tend to forget, when constantly fed news from Silicon Valley tech bros applauding their apps’ unique algorithms, that while back-end social media practices are mostly automated, the most traumatising work of moderating these sites is still carried out by employees. With our increased reliance on the internet, maintaining this humanity in content moderation – providing depth, nuance and empathy that technology cannot – is vital. But at what, very human, cost?

Over recent years, horror stories from content moderators have surfaced despite the slew of non-disclosure agreements (NDAs) and a code of silence engulfing those moderating for big tech. Last year, Casey Newton drew back the curtain to reveal the murky, unknown world of Facebook’s content moderation. In detailing moderators’ experiences at a contracted site in Arizona, Newton’s piece shocked the world. It presents a decidedly dystopian picture of a workplace and global landscape of content moderation 'perpetually teetering on the edge of chaos.' Some moderators had panic attacks after repeatedly viewing footage depicting violence, animal abuse or suicide, while others slept with guns by their bedside. Since then, more and more content moderators have emerged from social media’s slicked-back world – presented as one of big money, big dreams and big change – with similar stories of being overworked, underpaid and traumatised by the mental and physical strain of their roles.

Earlier this year, Facebook was forced to pay a giant $52m settlement to 50,000 current and former content moderators whose time spent sifting through endless images and videos of violent content and hate speech left them dealing with PTSD and a range of other mental health issues. YouTube is now also being sued by a former employee who claims the company failed to safeguard employees’ mental health, with understaffing resulting in employees moderating harmful and disturbing video content for more than four hours at a time – the limit set by YouTube’s best practices. Investigations even found that YouTube specifies in employment contracts that the job could give moderators PTSD, in an attempt to avoid such lawsuits.

This year, the pandemic only exacerbated health and safety risks for those performing the relentless task of playing catch-up to an insurmountable tide of reported, harmful content, whether working from home or urged to risk their own wellbeing, and that of their families, by returning to ill-equipped moderation offices. For content moderators like the anonymous Facebook moderators who took to Medium in April to express solidarity with a boycott of the platform amid Black Lives Matter protests – while Mark Zuckerberg stood idly by as racism and far-right hate speech flourished on Facebook – the necessity of proper employment rights is clear. “At the moment,” they wrote, “content moderators have no possibility, no network or platform or financial security – especially when we are atomised in a pandemic and remotely micromanaged – to stage an effective walkout without risking fines, our income and even our right to stay in the countries where we live and work.”

The question of how we solve a problem like content moderation is undoubtedly a difficult one, as matters of human interest and accountability are dwarfed by the profit-driven, protectionist motives of big tech. “I think it all comes down to transparency,” says Carolina Are, an online inequalities and moderation researcher at City, University of London, who studies algorithm bias, online abuse and social media. “Through clarity and transparency, hopefully we will have more standard employment practices for moderators who may use employment tribunals etc.” Not only would this help moderators unionise “so that they can stop being mysterious, sometimes exploited workers behind the scenes,” she says, but it could restore user trust in opaque, confusing decisions made according to in-platform policies.

In April, leading academics at The Alan Turing Institute argued that “content moderators should be recognised as ‘key workers,’ given financial compensation and mental health support reflecting the difficulties and importance of their roles, and enabled to work flexibly with privacy-enhancing technologies.” This echoes demands made by ex-employees suing the social media sites they once moderated. Foxglove, based in London, are helping content moderators challenge the third-party companies tasked with moderating for big tech. Their support for content moderators across Europe has already increased awareness of workplace conditions for moderators, which Foxglove says could be improved by “better technology, more reasonable targets, proper psychological support, and higher pay.”

Facebook has previously called its content reviewers “the unrecognized heroes who keep Facebook safe for all the rest of us,” but with hateful content increasingly erupting across social media, content moderators are left traumatised and silenced by NDAs. Over the last decade, the sites and tools built to connect us have in fact helped us tear ourselves and each other apart. But it doesn’t have to be this way. As Are says, “until governments get involved to ensure platforms pay more attention to fairness and clarity, we'll keep having the same problems.” Until then, moderators are the internet’s last safeguard.

Illustration by Monika Stachowiak